One of the trade-offs of today’s technological progress is the high energy cost of processing digital information. To build AI models on silicon-based processors, we need to train them on huge amounts of data, and as a rule, the more data, the better the model. The current success of large language models such as ChatGPT illustrates this well: their impressive abilities stem largely from the huge amounts of data used to train them.

The more data we use to train digital AI, the better it becomes, but the more computational power, and therefore energy, it requires.

This is why, to develop AI further, we need to consider alternatives to the current silicon-based status quo. Indeed, Sam Altman, the CEO of OpenAI, has recently been widely reported discussing this very topic.

The Problem of CO2 Emissions

Considerable effort today goes into limiting energy consumption and CO2 emissions across industry sectors. One approach is to create new hardware for existing energy-hungry devices; the work on electric cars is a good example. However, media coverage does not always focus on the right problems. We often pay less attention to the ‘real’ biggest producers of CO2 and focus instead on smaller cases that are closer to our everyday lives, such as cars, plane flights, and household devices. These cases are easy to understand, but they are not necessarily the biggest sources of CO2 emissions worldwide.

AI models trained on digital hardware require a lot of energy and can therefore become significant CO2 emitters. One widely cited estimate suggests that training a single large model with current digital technologies can emit as much CO2 as five cars over their entire lifetimes.

AI is not currently the biggest contributor to CO2 emissions, but given the boom in large AI models, which rely on processing enormous amounts of data, we can expect CO2 emissions from data centres to grow very quickly. This is why it is so important not to make the future of AI depend solely on energy-expensive digital computation.

The development of AI cannot be stopped simply by declaring that ‘it uses too much energy’ and imposing regulations, as there will always be places on the planet where such regulations can be circumvented. Countries that inhibit technological development will therefore fall behind countries where such regulations are less strict, and losing the lead in technology development can carry enormous strategic costs. Western countries, which are leading the initiative to lower CO2 emissions, therefore need to address the problem differently: by encouraging the development of energy-efficient tools as the best route to reducing CO2 emissions, rather than by limiting the development and use of current AI models.

Possible Solutions

Many experts believe this problem can be tackled from two different angles:

- Find alternative ways of producing energy so that we can keep increasing the use of our silicon-based servers. Leading alternatives include fusion, geothermal, and nuclear energy; however, these energy sources are either still very immature or raise significant public concerns about safety.

- Develop unconventional computing, a field that explores new ways of computing in both hardware and software. One example is biological computing, a new field in which living neurons perform computations and could become a new generation of processors for future biological computers.

We believe that unconventional computing is the best way to reduce CO2 emissions.

Energy Efficiency of Biological Computing

Biological computing is a field in which computers are constructed from living neurons.

One of the biggest advantages of biological computing is that neurons process information using far less energy than digital computers. By some estimates, living neurons can use over a million times less energy than the digital processors we use today. A comparison with one of the most powerful computers in the world, Frontier, built by Hewlett Packard Enterprise, illustrates the gap: for approximately the same speed and 1000 times more memory, the human brain uses 10 to 20 W, compared with about 21 MW for the computer.
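The size of this gap can be checked with simple arithmetic. The sketch below takes the figures quoted above (about 21 MW for Frontier and 10 to 20 W for the brain) and computes the power ratio and the energy each would draw over a year of continuous operation. These are illustrative inputs, not measurements, and the constant and function names are hypothetical, chosen only for this example.

```python
# Back-of-the-envelope power comparison using the figures quoted in the text.
# Inputs are illustrative, not measurements; names are hypothetical.

FRONTIER_POWER_W = 21e6              # ~21 MW, as quoted for Frontier
BRAIN_POWER_RANGE_W = (10.0, 20.0)   # 10 to 20 W, as quoted for the human brain
HOURS_PER_YEAR = 24 * 365            # continuous operation, ignoring leap years


def power_ratio(machine_w: float, brain_w: float) -> float:
    """How many times more power the machine draws than the brain."""
    return machine_w / brain_w


def annual_energy_kwh(power_w: float) -> float:
    """Energy drawn over one year of continuous operation, in kWh."""
    return power_w * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    for brain_w in BRAIN_POWER_RANGE_W:
        ratio = power_ratio(FRONTIER_POWER_W, brain_w)
        print(f"brain at {brain_w:.0f} W: Frontier draws {ratio:,.0f}x more power")
    print(f"Frontier per year: {annual_energy_kwh(FRONTIER_POWER_W):,.0f} kWh")
    print(f"Brain (20 W) per year: {annual_energy_kwh(20.0):,.1f} kWh")
```

Both ends of the quoted brain range give a ratio above one million (1.05 million at 20 W and 2.1 million at 10 W), which is consistent with the “over a million times” estimate for this particular comparison.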

This is one of the reasons why using living neurons for computation is such a compelling opportunity. Apart from possible improvements in the generalization of AI models, we could also reduce greenhouse gas emissions without sacrificing technological progress.

What are the challenges?

Of course, using living neurons to build next-generation bioprocessors is not an easy task. Despite their advantages, such as energy efficiency, scalability, and a proven ability to process information, bioprocessors made from living neurons are hard to develop.

We still do not know how to program them. Unlike digital computers, biocomputers are real black boxes, so making them work requires a lot of experimentation. But if we find a way to control these black boxes, they could become truly powerful computing tools.

Computations on Living Neurons at FinalSpark

FinalSpark’s mission is to contribute to this effort. We are building bioprocessors from living neurons connected to electrical hardware that transmits information to and from them.

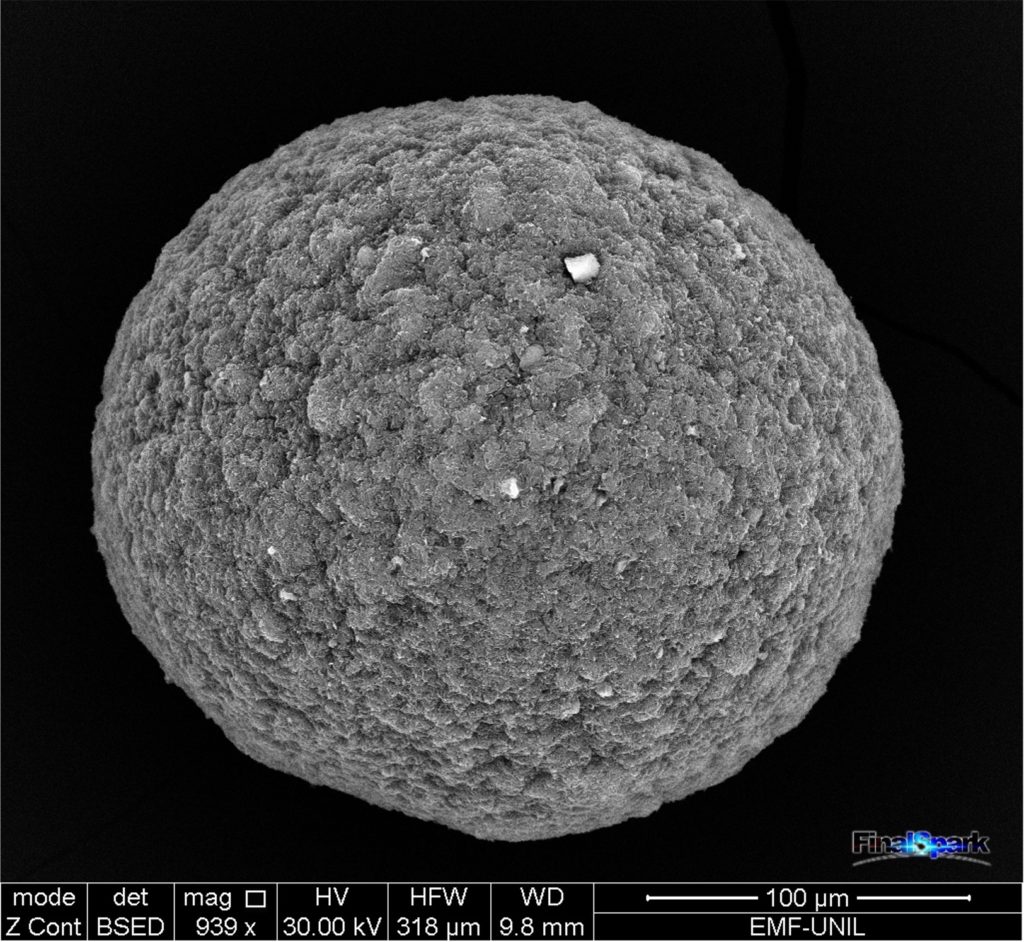

This is a brain organoid: a living structure built from an estimated 10,000 neurons and about 0.5 mm in diameter. In FinalSpark’s labs, these neurospheres are built from human neurons derived from induced pluripotent stem cells (iPSCs), which are generated from human skin cells. Neurospheres are used in biomedical research to study brain diseases and to better understand how the human brain works. At the FinalSpark lab, we use them for computation, a purely non-medical engineering objective: building a new type of computer processor.

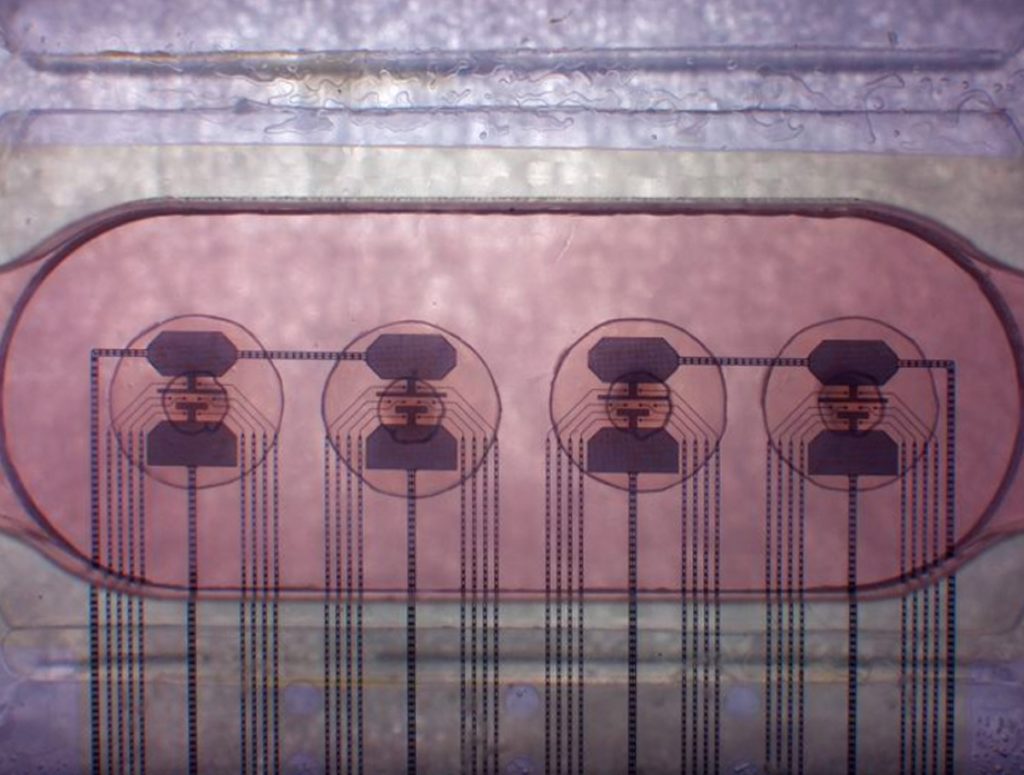

Neurospheres are placed on electrical devices that allow signals to be sent to and received from the neurons.

This kind of equipment has been used for years in electrophysiology research for biomedical purposes. Indeed, when we established the FinalSpark lab, we learned these techniques from biomedical researchers under the guidance of Prof. Luc Stoppini of the Hepia institute, who is now our scientific adviser.

To maintain optimal conditions, we keep our living neurons in incubators at 37 °C.

Cells are kept sterile, and all operations on them are performed in a filtered environment that protects against contamination by agents such as bacteria or viruses.

Behind the glass of the laminar-flow hood, in air filtered of microorganisms, we can handle living cells while keeping them healthy and sterile.

This is how the FinalSpark team aims to contribute to solving the problem of greenhouse gas emissions.

We hope that our work will lead to biocomputers that revolutionize not only technology but also the energy sector, helping to reduce CO2 emissions without compromising technological progress.